AI Meets Search: Bridging Text and Image Retrieval with NLP and CLIP

In this post, I’ll walk you through the design and implementation of a Système de Recherche d’Information (an information retrieval system): an intelligent platform that searches documents by text and image similarity.

🔍 Overview of the System

The goal of this project was to create an intelligent system for information retrieval, leveraging state-of-the-art Natural Language Processing (NLP) models and an intuitive user interface.

The system provides two core functionalities:

- Text Search: Users can input queries to find academic documents with similar content.

- Image Search: Users can upload images to find visually or semantically related content.

Key Technologies Used:

- Streamlit: For the user interface and interaction.

- SentenceTransformers: To generate text embeddings.

- CLIP: For image embeddings and similarity matching.

- Scikit-learn: For cosine similarity calculations.

- JSON Database: For storing documents and embeddings.

⚙️ System Architecture

The architecture of the system follows a client-server model with the following components:

1. User Interface (Streamlit)

Streamlit serves as the user-friendly front-end, allowing users to:

- Input textual queries.

- Upload images for similarity-based search.

- View results interactively.

2. JSON Database

Academic documents (text and images) are stored as JSON entries. This includes:

- Document titles and content.

- Precomputed embeddings for both text and images.
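As a rough illustration of that layout, the sketch below stores one entry per document with its embeddings next to the text. The field names (`image_path`, `text_embedding`, `image_embedding`) and the file name `documents.json` are assumptions made for this example, not the project's actual schema, and the vectors are cut down to three numbers for readability.

```python
import json
import os
import tempfile

# Hypothetical entry layout: the field names are illustrative assumptions.
entry = {
    "title": "AI Basics",
    "content": "An introduction to artificial intelligence.",
    "image_path": "images/ai_basics.png",    # hypothetical path
    "text_embedding": [0.12, -0.03, 0.88],   # truncated for readability
    "image_embedding": [0.05, 0.41, -0.27],  # truncated for readability
}

def save_database(entries, path):
    """Write all document entries to a single JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(entries, f, ensure_ascii=False, indent=2)

def load_database(path):
    """Read the entries back; embeddings come back as plain lists of floats."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)

# Round-trip through a temporary directory so the example leaves no files behind.
with tempfile.TemporaryDirectory() as tmp:
    db_path = os.path.join(tmp, "documents.json")
    save_database([entry], db_path)
    loaded = load_database(db_path)
```

Keeping the vectors as plain lists matters here because JSON has no array type for NumPy data; they can be converted back to arrays after loading if needed.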

3. NLP and Image Models

- SentenceTransformers: Converts text into dense vector embeddings.

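- CLIP: Processes images and generates embeddings that align with CLIP's own text embeddings in a shared space. These vectors are not directly comparable with the SentenceTransformers text embeddings, which live in a separate space.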

4. Search Engine

Using cosine similarity, the system matches user inputs (text or image embeddings) with stored document embeddings to retrieve the most relevant results.
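To make the matching step concrete, here is a minimal sketch of what cosine similarity computes and how it can rank stored vectors. It uses plain Python instead of scikit-learn so the arithmetic is visible; `cosine` and `top_k` are hypothetical helper names, and the 2-D vectors are toy stand-ins for real embeddings.

```python
import math

def cosine(a, b):
    """Cosine similarity: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen for zero vectors in this sketch
    return dot / (norm_a * norm_b)

def top_k(query_vec, doc_vecs, k=3):
    """Return (index, score) pairs for the k most similar stored vectors."""
    scored = [(i, cosine(query_vec, v)) for i, v in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy 2-D vectors standing in for real embeddings
doc_vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ranking = top_k([1.0, 0.1], doc_vecs, k=2)
```

Here the query points almost exactly along the first document's vector, so that document ranks first and the diagonal vector ranks second. Returning the top k results instead of a single best match is a design choice that tends to suit a search interface better.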

🛠️ Implementation Details

1. Generating and Storing Embeddings

Each document in the system is processed to generate embeddings, which are then saved in a JSON file for quick retrieval.

Example Code for Text Embeddings

    import json

    from sentence_transformers import SentenceTransformer

    # Load the SentenceTransformer model
    model = SentenceTransformer('paraphrase-MiniLM-L6-v2')

    def generate_embeddings(documents):
        """Encode each document's content and save the vectors to embeddings.json."""
        embeddings = []
        for doc in documents:
            # encode() returns a NumPy array; convert it to a list so json can serialize it
            embedding = model.encode(doc["content"]).tolist()
            embeddings.append(embedding)

        # Save embeddings to JSON
        with open('embeddings.json', 'w') as file:
            json.dump(embeddings, file)
        return embeddings

    # Example document
    documents = [{"title": "AI Basics", "content": "An introduction to artificial intelligence."}]
    embeddings = generate_embeddings(documents)

2. Performing a Search

For text-based searches, the system calculates the cosine similarity between the user query embedding and stored document embeddings.

Example Code for Text Search

    from sklearn.metrics.pairwise import cosine_similarity

    def search_text(query, embeddings, documents):
        # Embed the query with the same model used for the documents
        query_embedding = model.encode(query)
        # Shape (1, n_documents): one similarity score per stored embedding
        similarities = cosine_similarity([query_embedding], embeddings)
        best_match_idx = similarities.argmax()
        return documents[best_match_idx]

For image-based searches, embeddings are generated using CLIP, and a similar process is followed.
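A minimal sketch of the image path could look like the following. It assumes the `clip-ViT-B-32` checkpoint distributed with the sentence-transformers library (the post does not say which CLIP weights were used) and assumes Pillow is installed. `load_clip_model`, `embed_image`, and `search_image` are hypothetical helper names, and the ranking runs on toy vectors so it can be tried without downloading the model.

```python
import math

def load_clip_model():
    """Load a CLIP checkpoint through sentence-transformers (assumed choice)."""
    # Imported lazily so the ranking helpers below work without the model installed.
    from sentence_transformers import SentenceTransformer
    return SentenceTransformer("clip-ViT-B-32")

def embed_image(clip_model, image_path):
    """Encode one image file into a CLIP embedding, returned as a plain list."""
    from PIL import Image
    with Image.open(image_path) as img:
        return clip_model.encode(img.convert("RGB")).tolist()

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search_image(query_embedding, image_embeddings, documents):
    """Return the document whose stored image embedding is closest to the query."""
    scores = [cosine(query_embedding, emb) for emb in image_embeddings]
    best_match_idx = max(range(len(scores)), key=scores.__getitem__)
    return documents[best_match_idx]

# Toy 3-D vectors stand in for real CLIP embeddings (512 dimensions for ViT-B/32).
documents = [{"title": "Neural networks"}, {"title": "Decision trees"}]
image_embeddings = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9]]
best = search_image([0.8, 0.2, 0.1], image_embeddings, documents)
```

With the real model, the query vector would come from something like `embed_image(load_clip_model(), "query.png")`, where the file name is a placeholder. Because CLIP encodes text into the same space, a text query encoded with the same CLIP model could be compared against these image embeddings as well.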

3. User Feedback and Reinforcement Learning

To improve over time, the system collects user feedback (e.g., marking results as “relevant” or “not relevant”). This feedback can be incorporated into a reinforcement learning loop to refine the ranking of results.
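One lightweight way to act on that feedback, well short of a full reinforcement learning setup, is to keep a running score per document and blend it into the similarity score. The sketch below is an assumption about how such a loop could look, not the project's implementation; `FeedbackStore`, `rerank`, the `weight` parameter, and the +1/-1 voting scheme are all hypothetical.

```python
from collections import defaultdict

class FeedbackStore:
    """Accumulates relevant / not-relevant votes per document index."""

    def __init__(self):
        self.votes = defaultdict(int)   # net votes: +1 relevant, -1 not relevant
        self.counts = defaultdict(int)  # total votes received

    def record(self, doc_idx, relevant):
        self.votes[doc_idx] += 1 if relevant else -1
        self.counts[doc_idx] += 1

    def bonus(self, doc_idx):
        """Average vote in [-1, 1]; 0.0 for documents with no feedback yet."""
        n = self.counts[doc_idx]
        return self.votes[doc_idx] / n if n else 0.0

def rerank(similarities, feedback, weight=0.1):
    """Sort document indices by similarity plus a small feedback bonus."""
    adjusted = [s + weight * feedback.bonus(i) for i, s in enumerate(similarities)]
    return sorted(range(len(adjusted)), key=lambda i: adjusted[i], reverse=True)

feedback = FeedbackStore()
feedback.record(1, relevant=True)
feedback.record(1, relevant=True)
feedback.record(0, relevant=False)

similarities = [0.80, 0.75, 0.40]
order = rerank(similarities, feedback)
```

In this toy run, document 1 overtakes document 0 because its positive votes outweigh a small gap in similarity. Keeping `weight` small means feedback nudges the ranking without overriding the embedding scores.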

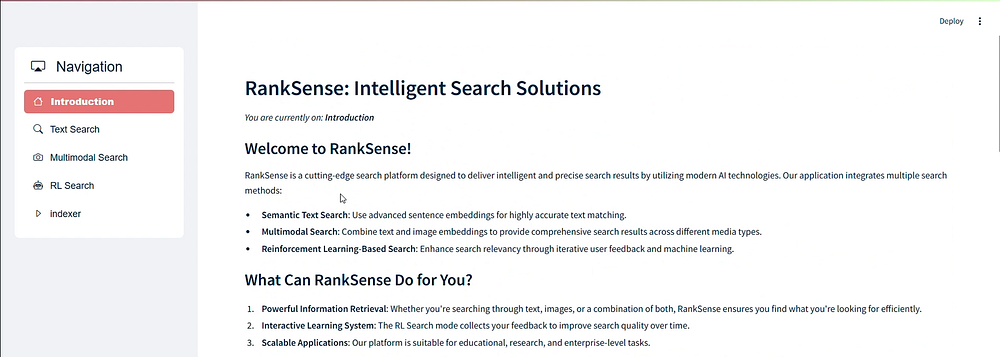

💻 Interface with Streamlit

Streamlit makes it easy to deploy an interactive web-based UI for the system.

Example Code for the UI

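    import streamlit as st

    # Assumes search_text, embeddings, and documents from the previous
    # sections are defined in the same script.
    st.title("Intelligent Information Retrieval System")

    query = st.text_input("Enter your query:")

    if query:
        result = search_text(query, embeddings, documents)
        st.write(f"Title: {result['title']}")
        st.write(f"Content: {result['content']}")

In the full interface, users can enter queries, upload images, and see results directly on the page; the snippet above covers only the text query path.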

🚀 Deployment and Scalability

1. Deployment with Streamlit

The system can be deployed locally or in the cloud, using Streamlit Cloud or a similar hosting service.

2. Scalability Enhancements

Future improvements could include:

- Expanding the dataset with more documents and images.

- Integrating advanced search algorithms or fine-tuned models.

- Using a more robust database (e.g., MongoDB) for handling larger datasets.

🌟 Conclusion and Future Directions

This project highlights how NLP and image embeddings can improve information retrieval. Combined with user feedback, they give the system a way to refine its rankings over time.

Potential future enhancements include:

- Implementing neural search methods for even better retrieval accuracy.

- Supporting multilingual queries for global accessibility.

- Exploring large-scale deployment strategies for real-world applications.

Let me know your thoughts or questions about this project! Feel free to connect with me if you’d like to collaborate or discuss similar ideas.

👉 LinkedIn: mohannad-tazi

Check out more updates and projects on my website: